I’m currently a PhD research assistant at the Institute for Computer Graphics and Vision (ICG) at Graz University of Technology. My research interests lie primarily in simultaneous localization and mapping (SLAM) and visual odometry (VO), with a special focus on real-time applications. I received my Master’s and Bachelor’s degrees in Biomedical Engineering from Graz University of Technology and also spent one year at Universidad Carlos III de Madrid, Spain.

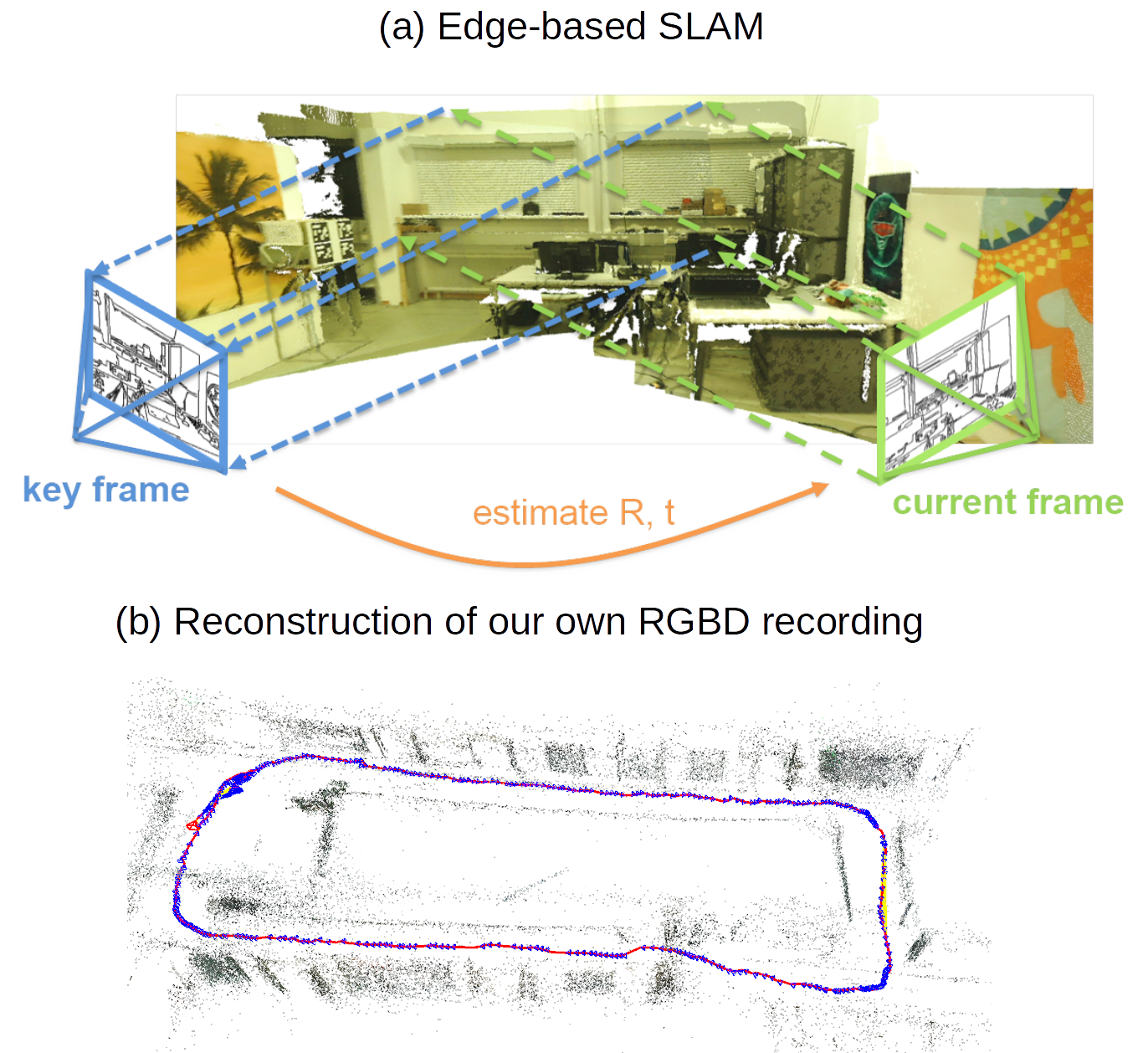

Our latest paper, “RESLAM: A real-time robust edge-based SLAM system”, has been accepted for presentation at ICRA 2019, which will take place in Montreal, Canada, from May 20 to 24, 2019.

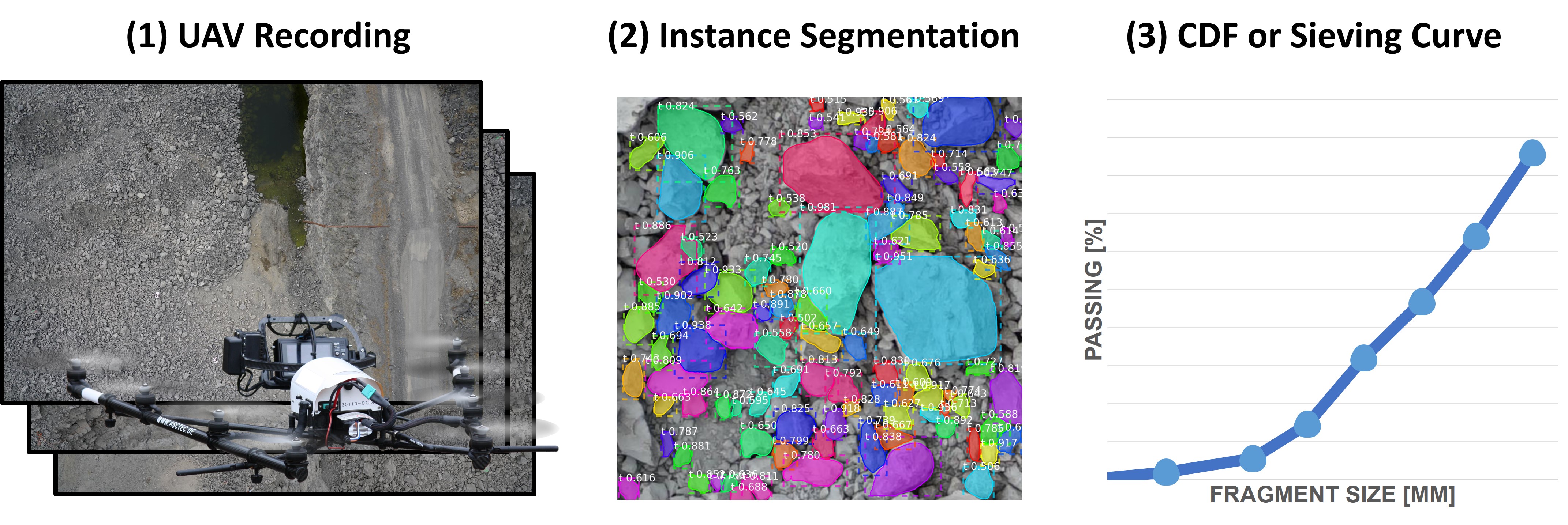

Our paper “Automatic Muck Pile Characterization from UAV Images” has been accepted for presentation at UAVg 2019, which will take place in Enschede, the Netherlands, from June 10 to 12, 2019.

Find me on Google Scholar or at TU Graz.

In open-pit mining, fast and accurate information about the fragmentation of a muck pile after a blast is essential for processing and production scheduling. In this work, we propose a novel machine-learning method that characterizes the muck pile directly from UAV images. In contrast to state-of-the-art approaches, which require heavy user interaction, expert knowledge and careful threshold settings, our method works fully automatically. We compute segmentation masks, bounding boxes and confidence values for each individual fragment in the muck pile at multiple scales to generate a globally consistent segmentation. Additionally, we recorded lab and real-world images to build our own dataset for training the network. Our method shows very promising quantitative and qualitative results in all our experiments. Further, the results clearly indicate that our method generalizes to previously unseen data.
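The abstract does not say how per-scale detections are merged into one globally consistent segmentation. One common way to do this, shown here purely as an illustrative assumption and not as the paper's method, is greedy non-maximum suppression (NMS) over the bounding boxes and confidence values pooled from all scales. The `merge_scales` function, its input format and the `iou_thresh` value are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def merge_scales(detections: List[Tuple[Box, float]],
                 iou_thresh: float = 0.5) -> List[Tuple[Box, float]]:
    """Greedy NMS over fragment detections pooled from all image scales.

    Hypothetical input: (box, confidence) pairs whose boxes have already been
    mapped back to full-resolution image coordinates.
    """
    kept: List[Tuple[Box, float]] = []
    # Visit detections from most to least confident.
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a fragment only if it does not overlap a more confident one too much.
        if all(iou(box, k[0]) < iou_thresh for k in kept):
            kept.append((box, score))
    return kept


if __name__ == "__main__":
    dets = [((0, 0, 10, 10), 0.9),    # fine-scale detection
            ((1, 1, 11, 11), 0.6),    # same fragment seen at a coarser scale
            ((20, 20, 30, 30), 0.8)]  # a different fragment
    print(merge_scales(dets))
```

Here the two overlapping boxes (IoU of about 0.68) describe the same fragment, so only the more confident one survives. A full pipeline would also need to resolve the corresponding segmentation masks, which this sketch leaves out.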

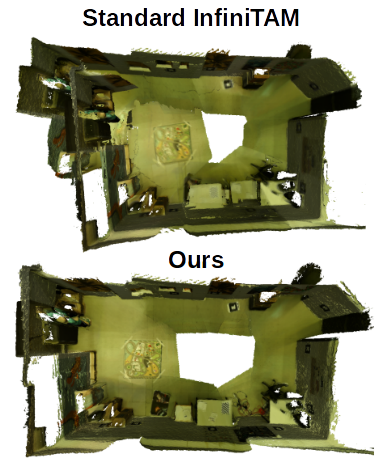

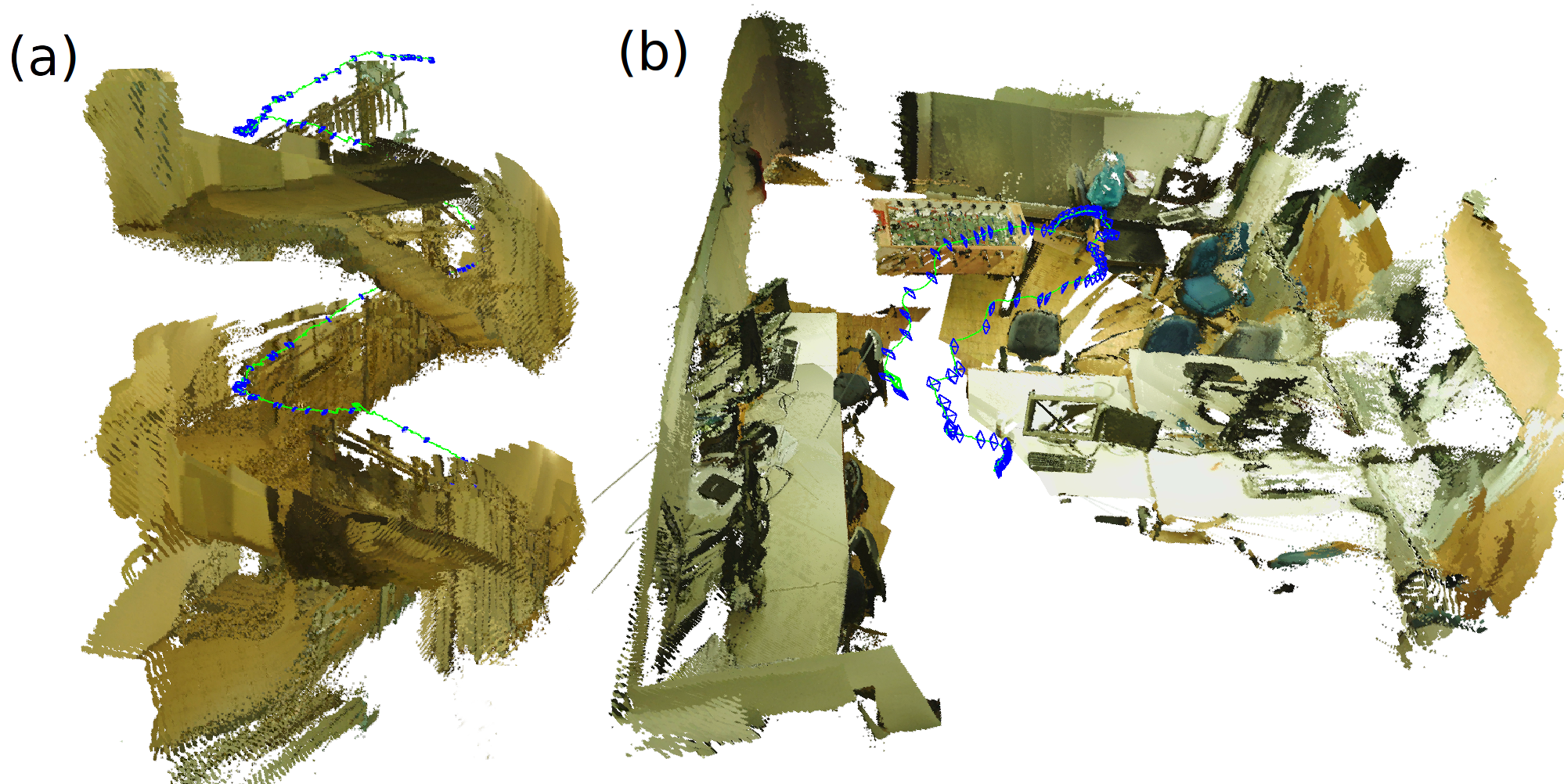

Simultaneous Localization and Mapping is a key requirement for many practical applications in robotics. In this work, we present RESLAM, a novel edge-based SLAM system for RGBD sensors. Due to their sparse representation, larger convergence basin and stability under illumination changes, edges are a promising alternative to feature-based or other direct approaches. We build a complete SLAM pipeline with camera pose estimation, sliding window optimization, loop closure and relocalisation capabilities that uses edges in every step. In our system, we additionally refine the initial depth from the sensor, the camera poses and the camera intrinsics in a sliding window to increase accuracy. Further, we introduce an edge-based verification for loop closures that can also be applied for relocalisation. We evaluate RESLAM on a wide variety of benchmark datasets that include difficult scenes and camera motions, and also present qualitative results. We show that this novel edge-based SLAM system performs comparably to state-of-the-art methods while running in real-time on a CPU. RESLAM is available as open-source software.

Best Paper Award

We present Hough Networks: a novel method that combines the idea of Hough Forests with Convolutional Neural Networks. Similar to Hough Forests, we perform a simultaneous classification and regression on densely extracted image patches. However, instead of a Random Forest, we use a CNN, which is capable of learning higher-order feature representations and does not rely on any handcrafted features. Applying a CNN at patch level allows the segmentation of the image into foreground and background. Furthermore, the structure of a CNN supports efficient inference on patches extracted from a regular grid. We evaluate the proposed Hough Networks on two computer vision tasks: head pose estimation and facial feature localization. Our method achieves at least state-of-the-art performance without sacrificing versatility, which allows extension to many other applications.
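The voting idea that Hough Networks share with Hough Forests can be illustrated with a minimal sketch of the vote-accumulation step alone. It assumes that each patch already comes with a foreground probability and a regressed 2D offset to the target location (for example a facial feature point). The CNN that would produce these predictions is not shown, and the tuple layout and the `bin_size` parameter are illustrative choices rather than details from the paper.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (x, y, p_foreground, dx, dy): patch center, foreground probability and the
# regressed offset from the patch to the target location (hypothetical layout).
PatchPrediction = Tuple[float, float, float, float, float]


def hough_vote(predictions: List[PatchPrediction],
               bin_size: float = 1.0) -> Tuple[float, float, float]:
    """Accumulate weighted votes and return (x, y, score) of the strongest bin."""
    accumulator: Dict[Tuple[int, int], float] = defaultdict(float)
    for x, y, p_fg, dx, dy in predictions:
        # Each patch votes for its predicted target location; background-like
        # patches (low p_fg) contribute little to the accumulator.
        key = (round((x + dx) / bin_size), round((y + dy) / bin_size))
        accumulator[key] += p_fg
    (bx, by), score = max(accumulator.items(), key=lambda kv: kv[1])
    return bx * bin_size, by * bin_size, score


if __name__ == "__main__":
    preds = [(3, 5, 0.9, 2, 0),    # foreground patches agreeing on (5, 5)
             (5, 3, 0.8, 0, 2),
             (7, 7, 0.7, -2, -2),
             (0, 0, 0.1, 9, 9)]    # background patch with a stray vote
    print(hough_vote(preds))
```

Weighting votes by the foreground probability is what lets the patch-level foreground/background classification suppress votes from clutter.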

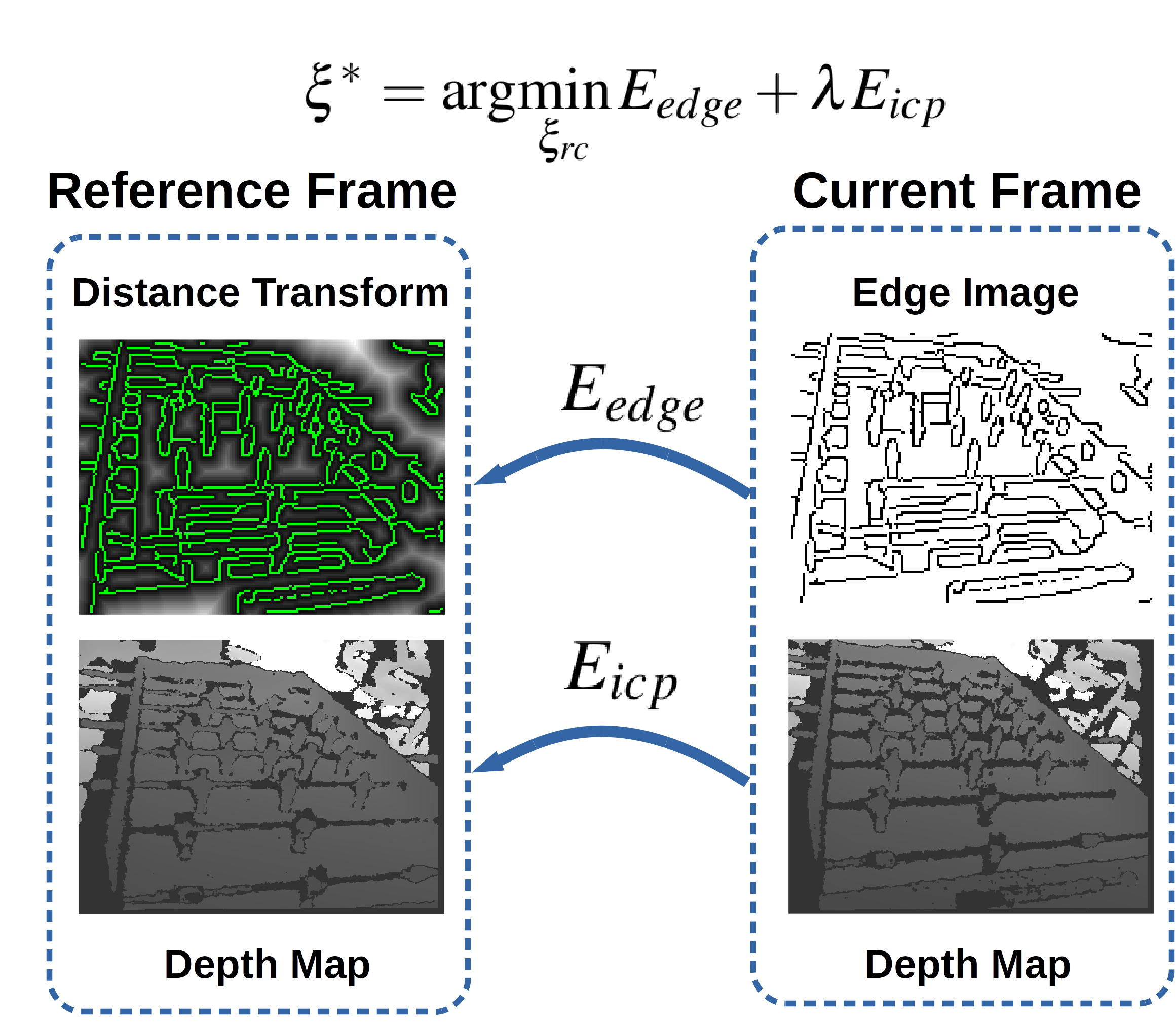

We propose a robust visual odometry system for RGBD sensors. The core of our method is a combination of edge images and depth maps for joint camera pose estimation. Edges are more stable under varying lighting conditions than raw intensity values, and depth maps further add stability in poorly textured environments. This leads to higher accuracy and robustness in scenes where feature- or photo-consistency-based approaches often fail. We demonstrate the robustness of our method under challenging conditions on various real-world scenarios recorded with our own RGBD sensor. Further, we evaluate on several sequences from standard benchmark datasets covering a wide variety of scenes and camera motions. The results show that our method achieves the best trajectory accuracy on most of the sequences, indicating that the chosen combination of edge and depth terms in the cost function is suitable for a multitude of scenes.

In this work, we present a real-time robust edge-based visual odometry framework for RGBD sensors (REVO). Even though our method is independent of the edge detection algorithm, we show that state-of-the-art machine-learned edges give significant improvements in robustness and accuracy compared to standard edge detection methods. Compared to approaches that rely heavily on the photo-consistency assumption, edges are less affected by lighting changes, and the sparse edge representation offers a larger convergence basin while keeping pose estimation very fast. Further, we introduce a measure for tracking quality, which we use to determine when to insert a new keyframe. We show the feasibility of our system on real-world datasets and extensively evaluate on standard benchmark sequences to demonstrate the performance in a wide variety of scenes and camera motions. Our framework runs in real-time on the CPU of a laptop computer and is available online.
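A common way to score edge alignment, assumed here for illustration rather than taken from the REVO implementation, is to precompute a distance transform (DT) of the reference edge map and sum its truncated values at the locations where the current frame's edge pixels land under a candidate motion. To stay self-contained, the sketch below replaces the 6-DoF RGBD warp with a 2D integer translation found by exhaustive search; the brute-force DT, the `max_cost` truncation and the search `radius` are hypothetical choices suitable only for tiny images.

```python
import math
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)


def distance_transform(edges: Set[Pixel], h: int, w: int) -> List[List[float]]:
    """Brute-force Euclidean distance to the nearest edge pixel, O(h * w * |edges|)."""
    return [[min(math.hypot(r - er, c - ec) for er, ec in edges) for c in range(w)]
            for r in range(h)]


def alignment_cost(dt: List[List[float]], cur_edges: Set[Pixel],
                   shift: Tuple[int, int], max_cost: float = 5.0) -> float:
    """Sum of truncated DT values at the current edge pixels after applying `shift`."""
    h, w = len(dt), len(dt[0])
    cost = 0.0
    for r, c in cur_edges:
        rr, cc = r + shift[0], c + shift[1]
        if 0 <= rr < h and 0 <= cc < w:
            cost += min(dt[rr][cc], max_cost)  # truncation limits the influence of outliers
        else:
            cost += max_cost  # pixels warped outside the image get the maximum penalty
    return cost


def best_shift(ref_edges: Set[Pixel], cur_edges: Set[Pixel],
               h: int, w: int, radius: int = 3) -> Tuple[int, int]:
    """Exhaustive search over integer shifts (a real system optimizes the full pose)."""
    dt = distance_transform(ref_edges, h, w)
    shifts = [(dr, dc) for dr in range(-radius, radius + 1)
              for dc in range(-radius, radius + 1)]
    return min(shifts, key=lambda s: alignment_cost(dt, cur_edges, s))


if __name__ == "__main__":
    # L-shaped reference contour in a 10x10 image.
    ref = {(r, 5) for r in range(2, 8)} | {(7, c) for c in range(2, 6)}
    # The current frame sees the same contour moved up by 1 row and right by 2 columns.
    cur = {(r - 1, c + 2) for r, c in ref}
    print(best_shift(ref, cur, 10, 10))
```

The DT is computed once per reference frame, so each cost evaluation only needs lookups at the sparse edge pixels. This is one reason edge-based tracking can stay fast, and the smooth growth of the DT away from edges is what widens the convergence basin compared to raw intensities.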

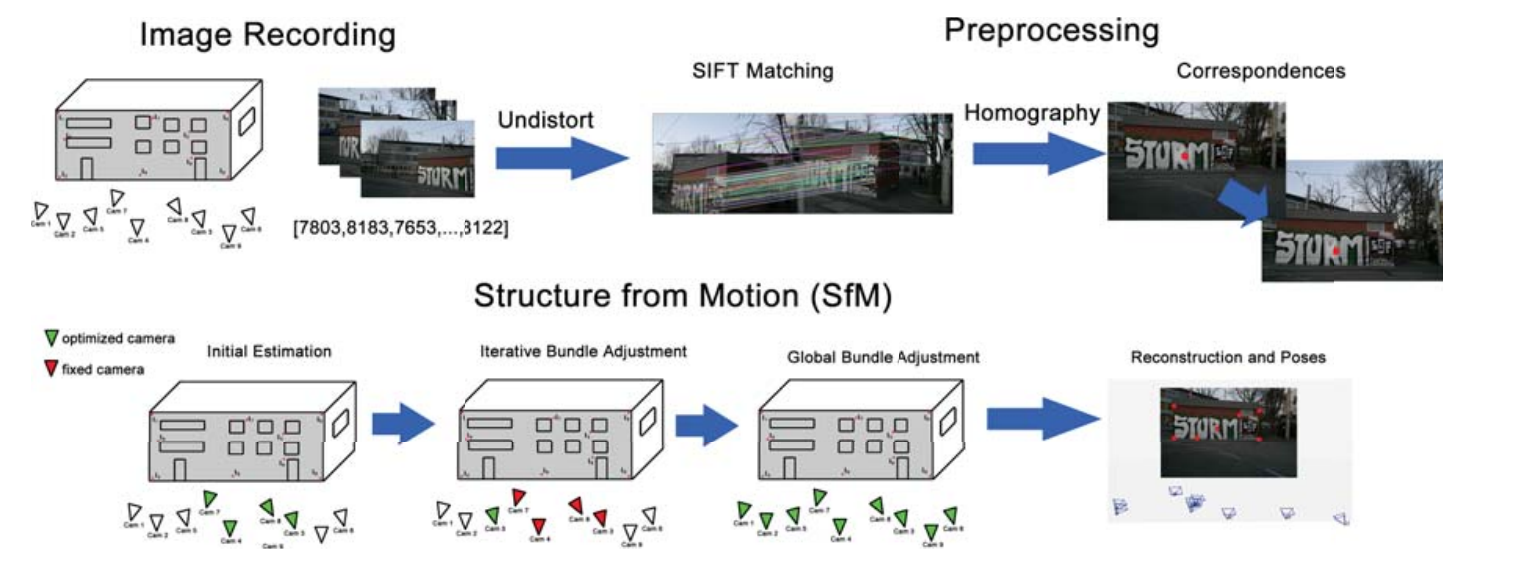

In this paper, we present a sparse approach for computing camera poses from RGB images and laser distance measurements for subsequent facade reconstruction. The core idea is to guide the image recording process by choosing distinctive features, e.g. building or window corners, with the laser range finder. From these distinctive features, we can establish correspondences between views to compute metrically accurate camera poses from just a few precise measurements. In our experiments, we achieve reasonable results in building facade reconstruction with only a fraction of the features used by standard structure from motion.

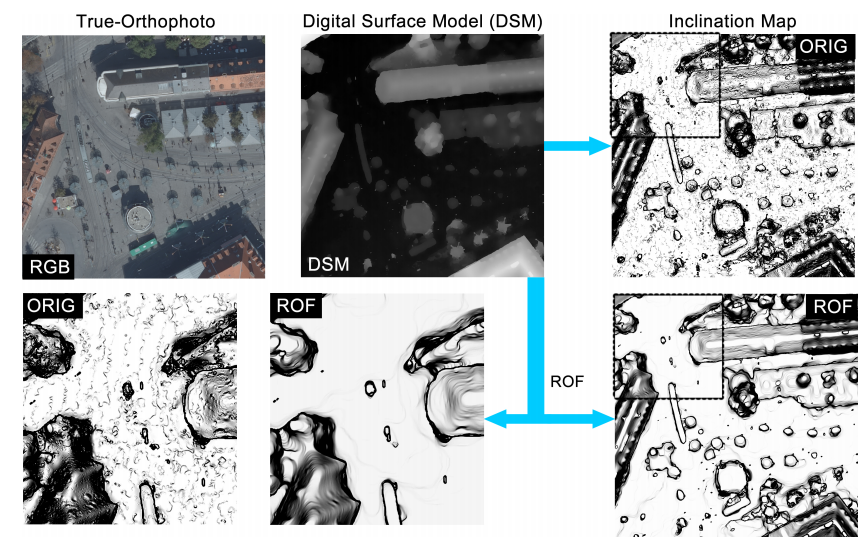

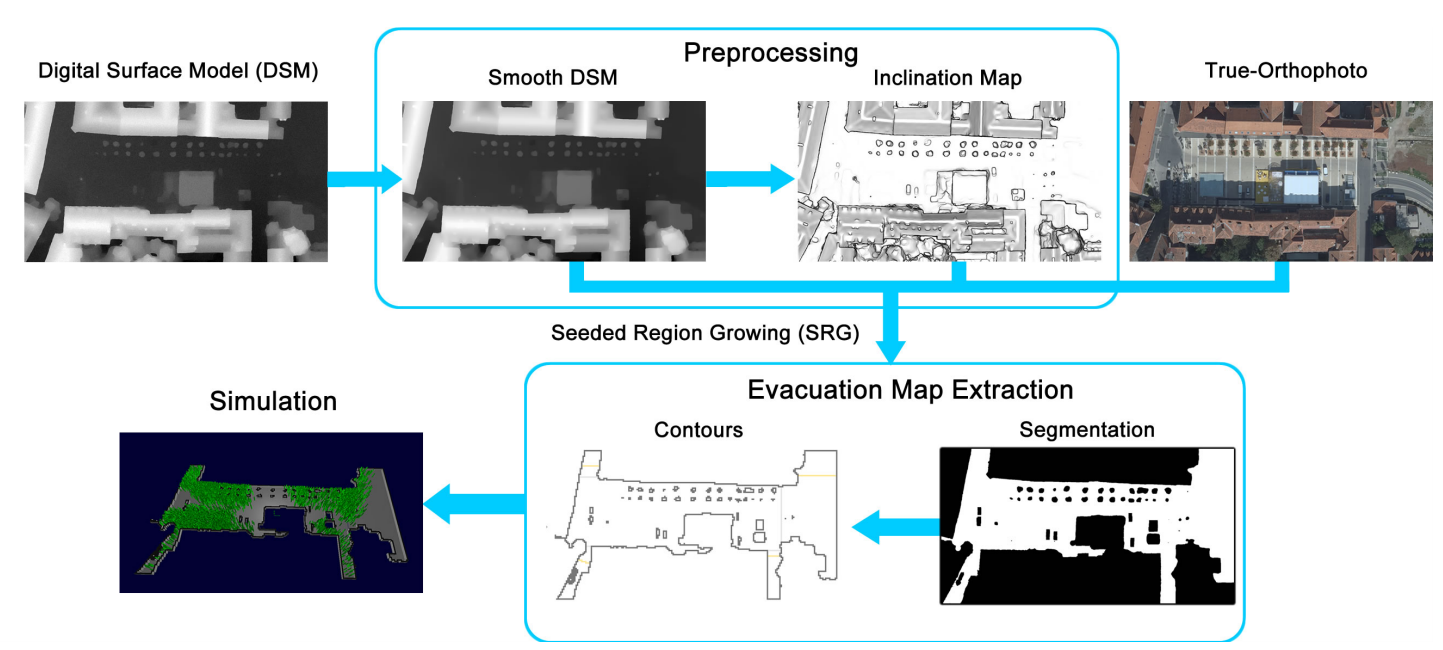

In this paper, we propose a novel, efficient and fast method to extract the walkable area from high-resolution aerial images for the purpose of computer-aided evacuation simulation for major public events. Compared to previous work, where authors only extracted roads and streets or solely focused on indoor scenarios, we present an approach to fully segment the walkable area of large outdoor environments. We address this challenge by modeling human movements in the terrain with a sophisticated seeded region growing algorithm (SRG), which utilizes digital surface models, true-orthophotos and inclination maps computed from aerial images. Further, we propose a novel annotation and scoring scheme especially developed for assessing the quality of the extracted evacuation maps. Finally, we present an extensive quantitative and qualitative evaluation, where we show the feasibility of our approach by evaluating different combinations of SRG methods and parameter settings on several real-world scenarios.

Computer-aided evacuation simulation is a very important preliminary step when planning safety measures for major public events. We propose a novel, efficient and fast method to extract the walkable area from high-resolution aerial images for the purpose of evacuation simulation. In contrast to previous work, where the authors only extracted streets and roads or worked on indoor scenarios, we present an approach to accurately segment the walkable area of large outdoor areas. For this task, we use a sophisticated seeded region growing (SRG) algorithm incorporating the information of digital surface models, true-orthophotos and inclination maps calculated from aerial images. Further, we introduce a new annotation and evaluation scheme especially designed for assessing the segmentation quality of evacuation maps. An extensive qualitative and quantitative evaluation, in which we study various combinations of SRG methods and parameter settings on different real-world scenarios, shows the feasibility of our approach.
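Both abstracts name seeded region growing (SRG) over inclination maps as the core step. The following is a minimal sketch of plain SRG. It assumes only a per-pixel inclination map (in degrees), 4-connectivity and a single hypothetical `max_slope` threshold; the paper's SRG variants, which also draw on the digital surface model and the true-orthophoto, are not reproduced here.

```python
from collections import deque
from typing import Iterable, Sequence, Set, Tuple

Cell = Tuple[int, int]  # (row, col)


def seeded_region_growing(slope: Sequence[Sequence[float]], seeds: Iterable[Cell],
                          max_slope: float) -> Set[Cell]:
    """Grow a walkable region from seed cells through 4-connected cells whose
    inclination does not exceed `max_slope` (breadth-first flood fill)."""
    h, w = len(slope), len(slope[0])
    region: Set[Cell] = set()
    queue: deque = deque()
    for r, c in seeds:
        # Seeds on steep terrain are ignored.
        if slope[r][c] <= max_slope and (r, c) not in region:
            region.add((r, c))
            queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and (nr, nc) not in region
                    and slope[nr][nc] <= max_slope):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


if __name__ == "__main__":
    # Inclination map in degrees: a steep wall in column 2 splits the scene.
    slope = [[2, 3, 40, 1, 1],
             [1, 2, 45, 2, 1],
             [2, 1, 50, 1, 2],
             [1, 1, 35, 1, 1]]
    walkable = seeded_region_growing(slope, seeds=[(0, 0)], max_slope=10.0)
    print(sorted(walkable))
```

In this toy map, the region grown from the single seed stays on the left of the wall, which mimics how a physical barrier cuts off walkable area that would otherwise be reachable in an evacuation map.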

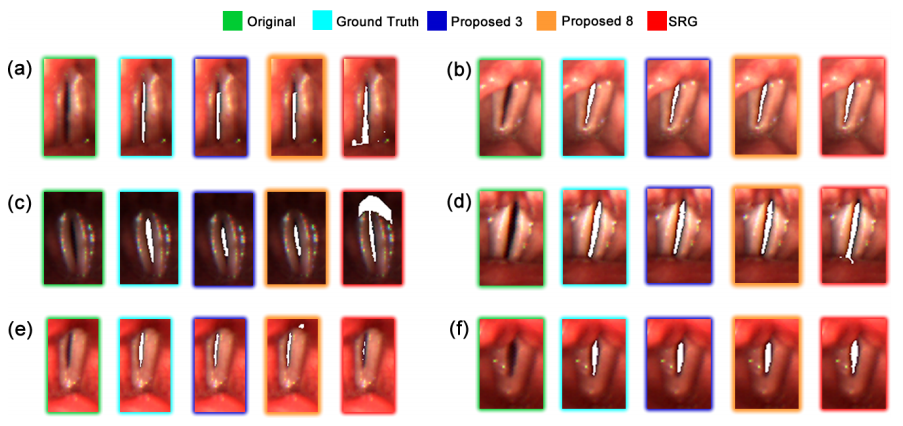

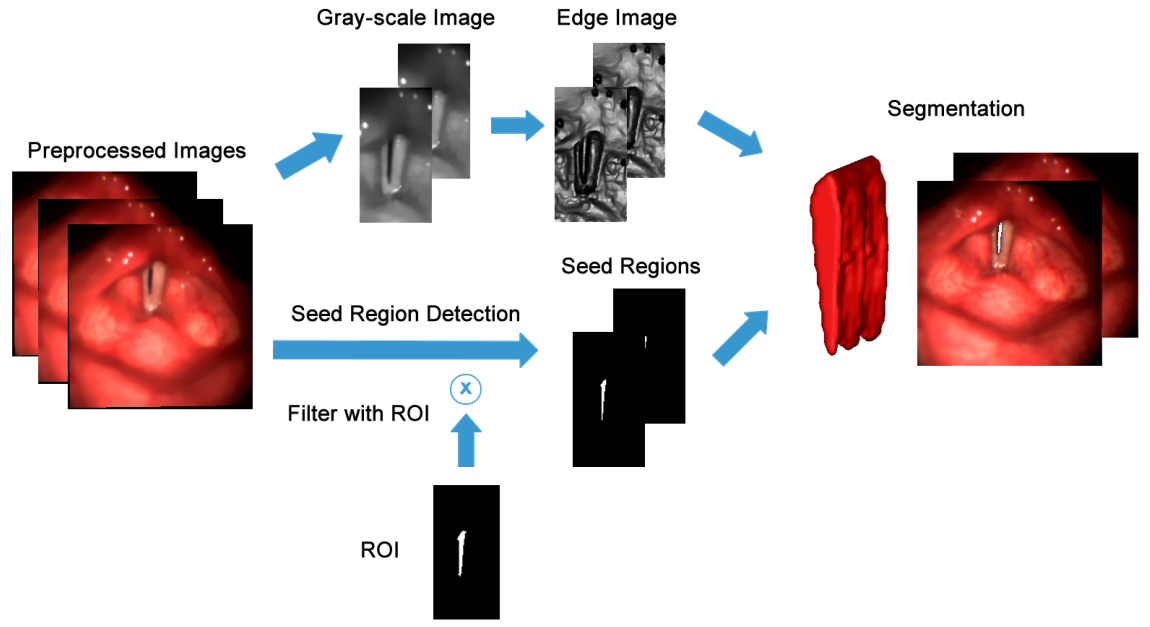

Verbal communication plays an important role in our economy. Laryngeal high-speed videos have emerged as a state-of-the-art method to investigate vocal fold vibrations in the context of voice disorders affecting verbal communication. Segmentation of the glottis from these videos is required to analyze vocal fold vibrations. The vast amount of data produced makes manual segmentation impossible in everyday clinical applications. Therefore, computer-aided, automatic segmentation is essential for the use of high-speed videos. In this work, a novel, fully automatic glottis segmentation method involving motion compensation, salient region detection and 3D Geodesic Active Contour segmentation is presented. By using color information and establishing spatio-temporal volumes, the method overcomes problems reported in related work regarding low contrast and multiple glottal areas. Efficient computation is achieved by a parallelized implementation using graphics adapters and NVIDIA CUDA. A comparison to the clinical standard, which is based on seeded region growing, shows that the proposed method achieves higher segmentation accuracy on manually annotated evaluation data.

Laryngeal high-speed videos are a state-of-the-art method to investigate vocal fold vibration, but the vast amount of data they produce prevents their use in clinical applications. Segmentation of the glottal gap is important for excluding irrelevant data from video frames for subsequent analysis. We present a novel, fully automatic segmentation method involving rigid motion compensation, saliency detection and 3D geodesic active contours. By using the full color information and establishing spatio-temporal volumes, our method deals with problems due to low contrast or multiple opening areas. Efficient computation is achieved by a parallelized implementation using modern graphics adapters and NVIDIA CUDA. A comparison to a semi-automatic seeded region growing method shows that we achieve improved segmentation accuracy.

If you have any questions about my research, just drop me an e-mail!